What free AI image generators are actually doing with your selfies

I watched a colleague upload 20 selfies to a free AI headshot generator. “It’s just for LinkedIn,” she said.

The results were impressive: professional lighting, perfect background, polished look. She downloaded her new profile picture and moved on with her day.

What she didn’t realise was that she’d just donated her face to a dataset that would outlive her career, her reputation, and possibly her ability to prove what’s real.

I bet you’ve seen them everywhere. Instagram ads promising to turn you into a Renaissance painting, a cyberpunk character, or a model standing in winter snow. The results are genuinely compelling. Your face, but elevated. Artistic. Shareable.

These apps are exploding in popularity. Millions of people are uploading their faces daily. The growth is staggering because the value proposition seems obvious: upload a few selfies, get beautiful AI-generated images back. Free, instant, magical.

But here’s what’s actually happening behind that “Generate” button…

#1 Your Face Is Now Training Data Forever

When you upload your face to these free tools, you’re not just getting images generated. You’re donating your biometric data to train future AI models.

Here’s how it works: The system doesn’t just process your photos and forget them. Your facial features, expressions, angles, and characteristics become part of the training dataset.

Every selfie you upload teaches the AI what “you” looks like from multiple perspectives.

Then it gets worse. That AI can now generate thousands of images of “you” in contexts you never chose.

You at a protest. You at a nightclub. You shaking hands with politicians you’ve never met. You in compromising positions. You in places you’ve never been.

And those generated images? They feed back into the training data for the next version of the model. Your synthetic doubles multiply exponentially.

Each generation creates more data points, more variations, more “versions” of you that never existed. This isn’t a future problem. It’s happening right now.

Your face, once uploaded, becomes an infinite resource that compounds over time. You can delete your account. You can revoke permissions.

But you can’t un-train a model that’s already learned what your face looks like from 47 different angles.

#2 Biometric Signatures Are More Permanent Than Passwords

Most people think they’re just uploading photos. They’re not.

These systems extract the mathematical signature of your face. The precise geometry of your features. The distances between your eyes, the curve of your jawline, the unique pattern of your facial structure.

This isn’t like a password you can change if it gets compromised. This isn’t like a credit card you can cancel.

Your facial geometry is biologically fixed. Once it’s captured, catalogued, and embedded in an AI system, **it’s permanent.** And it’s incredibly valuable.

Facial recognition systems, security databases, identity verification platforms - they all rely on these mathematical signatures.
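To make “mathematical signature” concrete: recognition systems commonly reduce a face to a list of numbers (an embedding) and compare two faces by how similar those lists are. The sketch below is a toy illustration of that idea, not any particular app’s pipeline. The four-number vectors, the `same_person` helper, and the 0.9 threshold are all invented for the example.

```python
import math

def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def same_person(a, b, threshold=0.9):
    """Treat two signatures as a match when their similarity reaches the threshold.

    The threshold is a hypothetical value chosen for this toy example.
    """
    return cosine_similarity(a, b) >= threshold

# Hypothetical signatures; real embeddings are much longer than four numbers.
selfie_2024 = [0.12, 0.80, 0.35, 0.45]
selfie_2029 = [0.10, 0.82, 0.33, 0.47]  # the same face, photographed years later
stranger = [0.90, 0.10, 0.40, 0.05]

print(same_person(selfie_2024, selfie_2029))  # the two selfies match
print(same_person(selfie_2024, stranger))     # the stranger does not
```

The comparison depends only on the numbers. A leaked password can be replaced with a new one, but a new photo of the same face produces nearly the same signature.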

When you hand yours over to a free app, you’re giving away something more intimate than your fingerprint and more permanent than your social security number.

The terms of service you didn’t read? They often include clauses that grant the company perpetual, worldwide, royalty-free rights to use your biometric data.

You signed away ownership of your face for a few AI-generated portraits. Unfortunately, you can’t get it back.
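If reading a full terms-of-service page feels daunting, a rough first pass is to search it for the kind of wording mentioned above. The following is a minimal sketch, assuming you have pasted the terms into a string. The `RED_FLAGS` list and the `flag_clauses` helper are hypothetical, the sample text is invented rather than quoted from any real policy, and keyword matching is only a prompt for a closer read.

```python
import re

# Hypothetical watch list; extend it for the policy you are reading.
RED_FLAGS = ["perpetual", "irrevocable", "worldwide", "royalty-free", "biometric", "train"]

def flag_clauses(terms_text, keywords=RED_FLAGS):
    """Return (keyword, sentence) pairs for sentences containing a watched word."""
    # Split on whitespace that follows sentence-ending punctuation.
    sentences = re.split(r"(?<=[.!?])\s+", terms_text.strip())
    hits = []
    for sentence in sentences:
        lowered = sentence.lower()
        for keyword in keywords:
            # \b anchors the keyword at a word start, so "train" also
            # catches "training" but not "constraint".
            if re.search(r"\b" + re.escape(keyword), lowered):
                hits.append((keyword, sentence))
    return hits

# Invented sample text for illustration only.
sample_terms = (
    "You keep ownership of your uploads. "
    "You grant us a perpetual, worldwide, royalty-free licence to use your content. "
    "We may delete inactive accounts."
)

for keyword, sentence in flag_clauses(sample_terms):
    print(f"[{keyword}] {sentence}")
```

Every flagged sentence is worth reading in full, since the surrounding words decide what the clause actually grants.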

#3 The Risk Profile You Never Created

Insurance companies are getting smarter about assessing risk. So are employers. So are background check services.

Now imagine this scenario: An insurer is evaluating your health insurance application. They run your face through an image search. Up come AI-generated images of “you” at extreme sports events you never attended, nightclubs you’ve never been to, locations that suggest lifestyle risks you don’t actually have.

These images were generated by an AI that learned your face. They look real. They’re indexed by search engines. And they create a risk profile that has nothing to do with your actual behaviour.

Or consider employment screening. A company runs your name and face. The algorithm finds dozens of AI-generated images of you in contexts that raise red flags. Political rallies. Controversial events. Associations you never had.

You can protest that these aren’t real. But how do you prove it?

The images exist. They’re timestamped. They’re shareable. And to an algorithm scraping the internet to build risk profiles, they’re just data points.

The scariest part? You don’t even know what images exist. You don’t know what contexts your AI-generated face has been placed in. You don’t know what profiles are being built using synthetic data about you. And you have no way to find out.

#4 Death by Ten Thousand Generated Images

Instagram AI model Milla Sofia

Everyone talks about deepfake videos. The ones where a celebrity’s face is swapped onto someone else’s body. Those are dramatic and obvious.

But the real threat isn’t one viral deepfake.

It’s 10,000 AI-generated images of you, scattered across the internet like digital shrapnel.

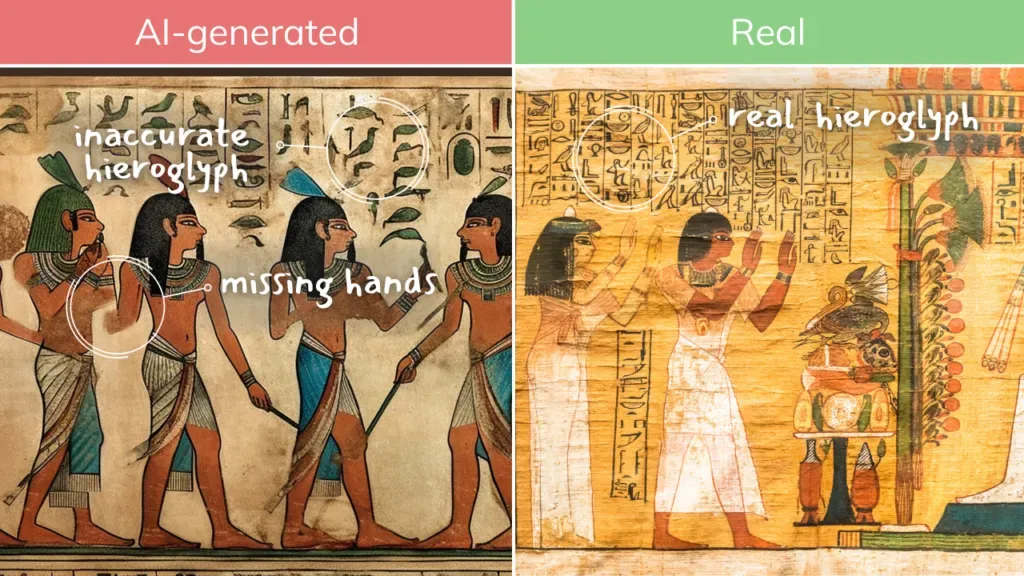

Viral AI Snow Photoshoot. Source: Perfect

Google yourself in five years. What comes up?

Photos of “you” that you never took. In places you’ve never been. Doing things you’ve never done. Wearing clothes you don’t own. Standing next to people you’ve never met.

Each image is individually unremarkable. But collectively? They pollute your digital presence beyond recognition. They bury the real you under an avalanche of synthetic versions.

Search engines index these images. Social media platforms distribute them. Other AI systems scrape them and incorporate them into new datasets. The multiplication is exponential.

And here’s the worst part:

You can’t control it. You can’t remove it. You can’t even track where it’s all going. These images are generated, posted, shared, and archived faster than you could ever hope to contain them.

Your digital identity fragments into a thousand synthetic versions of yourself, and you lose the ability to define who you actually are online.

#5 When Nothing Can Be Proven Anymore

We’ve worked on translation systems for international business meetings. One thing we’ve learned: trust collapses when communication becomes unreliable.

The same is happening with images. Once your face is in AI training data, every photo of you becomes suspect.

Did you actually attend that event? Did you really send that photo? Was that really you in that video?

The evidentiary value of photography is collapsing. And it’s happening faster than our legal systems, our social norms, or our personal relationships can adapt.

This has real consequences:

- In legal disputes, photographic evidence becomes meaningless when any image could be AI-generated.

- In professional contexts, your reputation becomes impossible to verify because any photo can be dismissed as synthetic.

- In personal relationships, trust erodes because you can never be sure what’s real.

The irony is brutal:

The more realistic AI-generated images become, the less we can trust real images. And once your face is part of that system, you lose the ability to prove what’s authentic.

Someone shares a photo of you doing something embarrassing? You can claim it’s AI-generated, but so can everyone else about their real photos.

The defence becomes meaningless because it’s universally available. We’re entering an era where “that’s not really me” is both a legitimate defence and a meaningless excuse. And once you’ve uploaded your face to free AI generators, you’re stuck in that ambiguity forever.

#6 The Environmental Bill You’re Not Seeing

Here’s something most people don’t think about when they upload selfies to generate AI art: the energy cost.

Every AI image generation requires significant computational power. Training the models requires even more. And when millions of people are uploading faces daily to create aesthetic portraits, the environmental impact compounds rapidly.

Studies suggest that generating images is among the more energy-intensive AI tasks, consuming far more energy per request than text-based tasks such as classification.

The models are large, the processing is intensive, and the infrastructure required to serve millions of users simultaneously is staggering.

You’re not just uploading your face for free portraits. You’re contributing to carbon emissions at scale, usually for purely aesthetic vanity projects.

That beautiful AI-generated image of you in winter snow? It cost more energy than most people realise.

The companies running these free services aren’t transparent about their environmental impact. They don’t disclose the carbon footprint of each generation. They don’t tell you that your 20 selfies might have contributed more emissions than leaving your laptop running for a week.

And because the service is free, users feel no friction. They generate dozens, hundreds, thousands of images. Each one burning energy. Each one contributing to a collective environmental cost that nobody’s tracking.

Vanity has a price tag. We just externalised it to the planet.
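Since providers rarely publish per-image figures, the most a user can do is a back-of-the-envelope estimate. The sketch below shows the arithmetic only: images generated, multiplied by energy per image, multiplied by the carbon intensity of the electricity used. The `generation_footprint` function is hypothetical, and the three input values are placeholders, not measurements; swap in figures from a source you trust.

```python
def generation_footprint(images, wh_per_image, grid_g_co2_per_kwh):
    """Return (energy in kWh, emissions in grams of CO2) for a batch of images."""
    energy_kwh = images * wh_per_image / 1000.0  # convert Wh to kWh
    emissions_g = energy_kwh * grid_g_co2_per_kwh
    return energy_kwh, emissions_g

# Placeholder inputs, NOT measured values.
energy_kwh, emissions_g = generation_footprint(
    images=200,                 # how many images one user generates
    wh_per_image=3.0,           # assumed energy per generated image, in Wh
    grid_g_co2_per_kwh=400.0,   # assumed grid carbon intensity
)

print(f"{energy_kwh:.2f} kWh, {emissions_g:.0f} g CO2")
```

The estimate scales linearly with the number of images, which is why a free service with no friction matters: nothing slows the first input down.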

What Actually Respects Your Data

At VideoTranslatorAI, I’ve spent my time building translation and communication tools that process sensitive information in real time.

One thing I’ve learned: If a tool is free and requires your biometric data, you are the product.

The alternative exists.

- Tools that process data locally.

- Systems that don’t retain your information.

- Platforms that are transparent about data usage and give you actual control.

But they’re rarely free, because respecting privacy and data ownership has real costs. The companies that build them can’t monetise your face. They have to charge for the service itself. That’s the trade-off most people don’t see when they upload to free AI generators.

You’re not getting something for nothing. You’re paying with something far more valuable than money: permanent, irrevocable access to your biometric identity.

The Choice You Didn’t Know You Were Making

Every time you upload your face to a free AI tool, you’re making a choice. But most people don’t realise what they’re choosing.

You’re choosing to:

- Make your face available for infinite synthetic reproduction

- Donate your biometric signature to datasets you’ll never see

- Risk algorithmic profiling based on images you never created

- Pollute your digital identity with synthetic versions of yourself

- Eliminate your ability to prove what’s real

- Contribute to environmental costs you can’t measure

All for a few aesthetically pleasing portraits that you could have commissioned from an actual artist, or generated using tools that respect data privacy.

The scariest part? Most people will never know the full consequences of this choice. The impacts are distributed across years, invisible, and impossible to reverse.

What I’m Asking You to Consider

I’m not saying never use AI image generation. I’m saying understand what you’re actually trading. Your face is yours. Once you hand it over to systems designed to extract value from it, you lose control permanently.

Before you upload those selfies to the next trending AI app on Instagram:

- Read the terms of service, specifically around data usage and biometric rights

- Check if the tool processes locally or sends your data to external servers

- Ask whether the company is transparent about training data usage

- Consider if the aesthetic output is worth permanent loss of control over your likeness

Because once your face is in the training data, it’s not coming back.

And in five years, when you Google yourself and find a thousand images of a person who looks exactly like you but isn’t, you’ll understand exactly what you traded for that free AI portrait.

To learn how VideoTranslatorAI handles your documents’ privacy and security, drop us a message or email us at hello@videotranslator.ai.