Your AI translator is lying to you

You paste a sentence into Google Translate. The output looks perfect. You ship it to your global team.

What you don’t see: The AI just assigned gender to a job title that was neutral. It flattened a cultural idiom into corporate speak. It picked formal tone for a casual message, making your brand sound robotic.

AI translation isn’t just converting words—it’s making judgment calls about gender, culture, and tone. And those judgments carry bias.

These aren’t small glitches. They’re systematic patterns that reshape your message every time you translate.

Here are 6 biases hiding in every translation, and why they matter more than you think.

Bias #1: Gender Bias

AI assigns genders to professions with zero context.

“The doctor” becomes “he.”

“The nurse” becomes “she.”

By default.

The pattern holds across platforms. Your carefully crafted job description that avoids gender markers in English gets translated into gendered language in French, Spanish, or German. The algorithm doesn’t ask which gender you meant—it picks based on stereotypes embedded in millions of training examples.

The impact? You reinforce stereotypes in every translated sentence.

It happens without your knowing, and your DEI initiatives get undermined by the translation layer.

Bias #2: Cultural Framing

Neutral phrases in one culture carry emotional weight in another.

“Family values” sounds political in English, neutral in Japanese.

“Individual freedom” reads as straightforward in American English but can carry libertarian overtones in European translations.

The impact? Subtle tone shifts change meaning entirely. What reads as straightforward in your source language lands as loaded in translation. Your global audience receives different emotional signals from the same message.

Bias #3: Western-Centric Datasets

Most AI training data comes from English or Western sources. Non-Western idioms, proverbs, and context get flattened or lost.

Try translating “添い遂げる” from Japanese or “जुगाड़” from Hindi.

The AI outputs literal approximations that strip away cultural meaning. Regional expressions get replaced with generic Western equivalents.

The impact: Your message loses cultural nuance. A Chinese proverb becomes generic advice. A Hindi idiom turns into literal nonsense. Your content feels foreign even to the target audience.

Bias #4: Religious and Political Context

AI engines soften or avoid terms tied to sensitive ideologies. They smooth over religious references. They neutralise political language.

Words like “jihad,” “crusade,” or “liberation” get sanitised. The algorithm decides what might cause controversy and adjusts accordingly. Your theological discussion becomes vague. Your political analysis loses its edge.

The impact: Your intent gets distorted. Critical context disappears. The AI decides what’s “safe” to translate—not you.

Bias #5: Formality and Tone Bias

Languages like Korean, Japanese, and Spanish build formality into their grammar. AI tends to default to the formal register.

Your Instagram caption that says “Hey! Check this out” becomes stiff and distant in Korean. Your friendly customer email turns robotic in Japanese. The algorithm picks the safest register—formal—regardless of your brand voice or audience relationship.

The impact: Your casual brand voice becomes stiff. Everyday conversations sound robotic. You lose the tone that makes your content yours.

Bias #6: Socioeconomic Bias

Words like “worker” or “servant” shift tone based on the AI’s training data. Class-related phrases carry unintended connotations.

“Domestic worker” might translate neutrally in one language but take on demeaning overtones in another. “Blue collar” gets translated with associations the AI learned from decades of biased text data.

The impact: A neutral job description sounds demeaning in translation. Income-related language takes on baggage you didn’t intend. Your respect for labour gets lost in algorithmic associations.

Take back control

AI translation will keep making these calls—unless you guide it first.

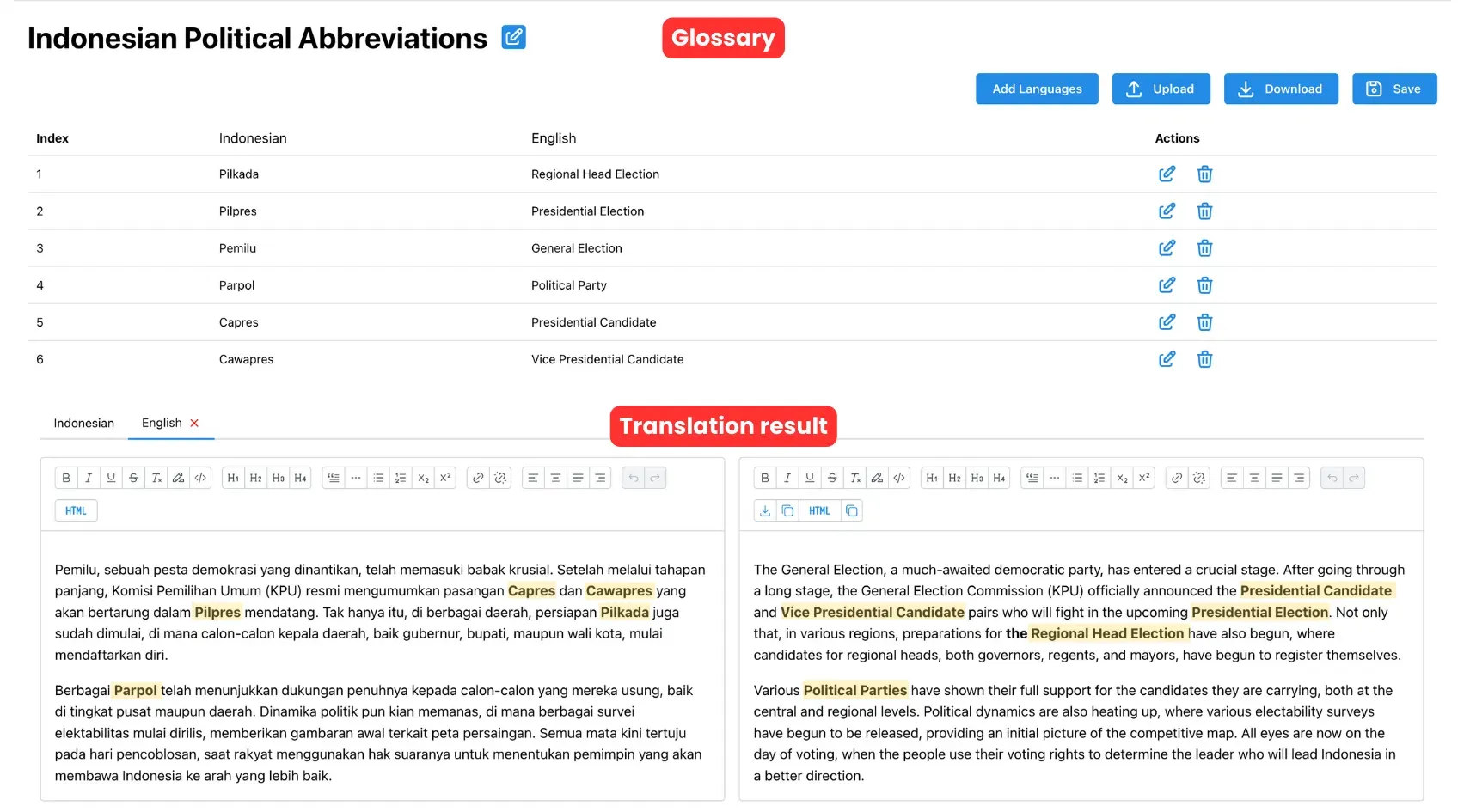

VideoTranslatorAI’s Custom Glossary lets teams define key terms, names, and context before translation happens.

You set the gender. You control the tone. You preserve the nuance. The AI follows your rules instead of its training data defaults.
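To make the idea concrete, here is a minimal sketch of how glossary enforcement can work in general. It is not VideoTranslatorAI's implementation. The function name `translate_with_glossary`, the `[[n]]` placeholder format, the stand-in `stub_translate` engine, and the Spanish glossary entries are all assumptions made up for illustration. The technique shown is placeholder masking: your terms are swapped for opaque tokens before translation, then replaced with your approved wording afterwards, so the engine never gets to choose.

```python
import re
from typing import Callable, Dict


def translate_with_glossary(
    text: str,
    glossary: Dict[str, str],
    translate: Callable[[str], str],
) -> str:
    """Mask glossary terms, translate the rest, then restore approved terms."""
    restorations: Dict[str, str] = {}
    protected = text

    # Longest terms first, so "domestic worker" is masked before "worker".
    for index, term in enumerate(sorted(glossary, key=len, reverse=True)):
        token = f"[[{index}]]"
        pattern = re.compile(r"\b" + re.escape(term) + r"\b", re.IGNORECASE)
        protected, hits = pattern.subn(token, protected)
        if hits:
            restorations[token] = glossary[term]

    translated = translate(protected)

    # Put the approved target-language wording back in place of each token.
    for token, approved in restorations.items():
        translated = translated.replace(token, approved)
    return translated


def stub_translate(text: str) -> str:
    """Hypothetical stand-in for a real translation engine (assumption)."""
    return "ES(" + text + ")"


# Illustrative glossary entries only; your team would supply its own.
glossary = {
    "domestic worker": "personal doméstico",
    "worker": "persona trabajadora",
}

print(
    translate_with_glossary(
        "Our Domestic Worker policy protects every worker.",
        glossary,
        stub_translate,
    )
)
```

One caveat with this approach: a real engine can alter or drop placeholder tokens, so a production version would want to check that every token survives translation before restoring the approved terms.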

Stop letting algorithms decide how your message lands. Start with the glossary.